Unwind Your Mind: Cybersecurity Compliance Simplified

Current Project Overview: We were asked to automate the auditing process for a cybersecurity framework that can have over 1000 questions in the audit. The average audit size is around 350 questions. The following is based on a real example of building an AI team to respond to a compliance framework. All statistics are taken from a real engagement.

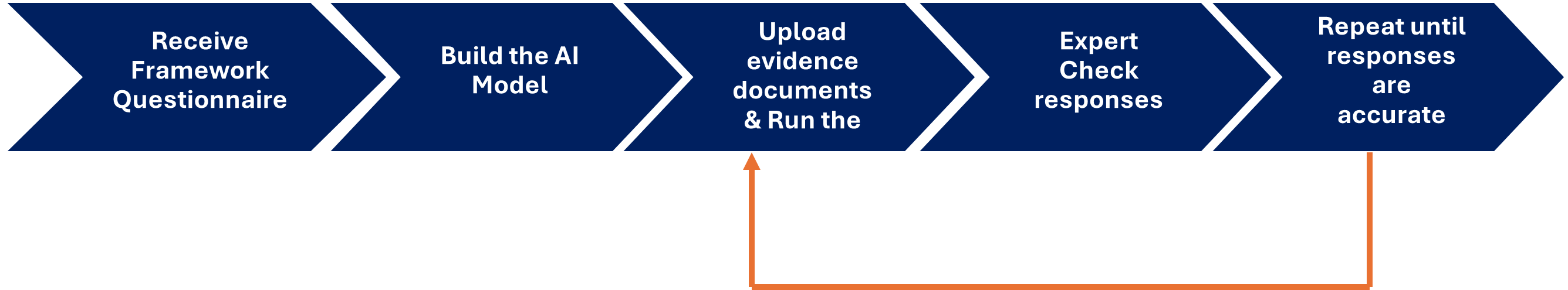

To develop a compliance framework auditing tool, we take an existing audit that has already been completed and certified and use it as the reference against which results are compared. The steps in the build process are:

1. Receive the standard cybersecurity questionnaire that needs to be automated.

2. Build the AI model(s) to respond to the questions.

3. Run a proof of concept to test the AI responses for accuracy, using an audit that has already been completed, so that the responses from that audit can be compared with the AI model's responses.

4. Engage with an expert in the chosen framework to check the accuracy of the AI responses.

5. Repeat the proof-of-concept steps and the engagement with the expert until the responses are equal to, or better than, the reference audit responses.
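The loop in steps 2 to 5 can be sketched roughly as follows. This is only an illustration under stated assumptions: `agreement_rate`, `tune`, `build_model` and `is_match` are hypothetical names, answers are plain strings keyed by question id, and `is_match` stands in for the expert's judgement of whether an AI response is as good as the reference response.

```python
from typing import Callable, Dict, List, Optional, Tuple

Answers = Dict[str, str]  # question id -> answer text (assumed shape)


def agreement_rate(ai: Answers, reference: Answers,
                   is_match: Callable[[str, str], bool]) -> float:
    """Fraction of reference questions whose AI answer is judged a match."""
    if not reference:
        raise ValueError("reference audit has no answers")
    matched = sum(1 for qid, ref in reference.items()
                  if qid in ai and is_match(ai[qid], ref))
    return matched / len(reference)


def tune(build_model: Callable[[Optional[float]], Callable[[str], str]],
         reference: Answers,
         is_match: Callable[[str, str], bool],
         target: float = 1.0,
         max_rounds: int = 5) -> List[Tuple[int, float]]:
    """Rebuild and re-test until the target agreement is reached."""
    history: List[Tuple[int, float]] = []
    previous_rate: Optional[float] = None
    for round_number in range(1, max_rounds + 1):
        # Step 2: build (or rebuild) the model, using the last result as feedback.
        answer_fn = build_model(previous_rate)
        # Step 3: answer every question in the completed reference audit.
        ai = {qid: answer_fn(qid) for qid in reference}
        # Step 4: score the AI answers against the reference answers.
        previous_rate = agreement_rate(ai, reference, is_match)
        history.append((round_number, previous_rate))
        # Step 5: stop once the responses are as good as the reference.
        if previous_rate >= target:
            break
    return history
```

In practice the feedback passed back into the build step would be the expert's written critique rather than a single number; the float is used here only to keep the sketch short.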

The framework in this example has a number of phases, and we were asked to address three of them:

Phase 1: Policy: The AI agent reads the evidence and aligns it to the framework questions.

Phase 2: Process: This is the evaluation phase, in which the results of the policy phase are used to produce a gap analysis for every question in the framework.

Phase 3: Implementation: This phase scores the results of the process phase according to how well each process has been adopted.

We enlisted an authorised partner for the framework, whose experts helped critique the AI responses across the three phases.

The POC audit contained 400 questions. However, it took 9 full audits with the tuned answers, which resulted in 10,400 questions being answered.

The experts sampled 140 random questions over several iterations. After each sample was analysed, a full audit of 400 questions was run.
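Drawing the random review samples could look something like the sketch below. The function name `draw_review_batches` is hypothetical, and two things are assumptions rather than facts from the engagement: that the samples did not overlap between iterations, and that they were split into equal batches (7 batches of 20 is used in the usage line only because it adds up to 140).

```python
import random
from typing import List, Sequence


def draw_review_batches(question_ids: Sequence[str], batch_size: int,
                        batches: int, seed: int = 0) -> List[List[str]]:
    """Pick non-overlapping random batches of questions for expert review."""
    needed = batch_size * batches
    if needed > len(question_ids):
        raise ValueError("not enough questions for non-overlapping batches")
    rng = random.Random(seed)  # fixed seed so the sample can be reproduced
    picked = rng.sample(list(question_ids), needed)  # sampling without replacement
    return [picked[i * batch_size:(i + 1) * batch_size] for i in range(batches)]


# Usage: a 400-question audit, reviewed in 7 batches of 20 (assumed split).
audit_questions = [f"Q{n}" for n in range(1, 401)]
review_batches = draw_review_batches(audit_questions, batch_size=20, batches=7)
```

Fixing the seed makes it possible to show an auditor exactly which questions were reviewed in each iteration.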

Traditional approach using a human team to tune the responses:

Experts:

- Karen: 10 years’ experience (B.A. in Management Information Systems) for Phases 1 and 2.

- Julie: 6 years’ experience (B.A. in Science) for Phase 3.

The experts take the results from the AI model, manually review the answers to the questions, compare them against the reference audit results and give feedback on the accuracy of each response. In this real-life exercise our experts, who also had other duties such as management responsibilities, took 1.5 weeks to critique 10 questions.

- Policy
  - Build Initial Model
  - Karen tests results: 16 hours, 3 weeks, 20 questions analyzed
  - Build 2nd Model
  - Karen tests results: 16 hours, 3 weeks, 20 questions analyzed
  - Build 3rd Model
  - Karen tests results: 16 hours, 3 weeks, 20 questions analyzed
  - Total Expert Time: 48 hours over 9 weeks

- Process
  - Build Initial Model
  - Julie tests results: 8 hours, 1.5 weeks, 20 questions analyzed
  - Build 2nd Model
  - Julie tests results: 8 hours, 3 weeks, 20 questions analyzed
  - Total Expert Time: 16 hours over 4.5 weeks

- Implementation
  - Build Initial Model
  - Julie tests results: 8 hours, 1.5 weeks, 20 questions analyzed
  - Build 2nd Model
  - Julie tests results: 8 hours, 3 weeks, 20 questions analyzed
  - Total Expert Time: 16 hours over 4.5 weeks

Summary:

- Total Expert Time Needed: 80 hours @ $250/hr = $20,000

- Time to Implement: 18 weeks

- Questions Analyzed: 140
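The summary figures for the human team follow directly from the per-phase totals listed above, as this short worked calculation shows. The variable names are illustrative; the inputs are the per-phase expert hours, elapsed weeks and number of review rounds, with 20 questions analyzed per round.

```python
# (phase, expert hours, elapsed weeks, review rounds) taken from the breakdown above
human_phases = [
    ("Policy", 48, 9.0, 3),
    ("Process", 16, 4.5, 2),
    ("Implementation", 16, 4.5, 2),
]
QUESTIONS_PER_REVIEW = 20  # each review round analyzed 20 questions
HOURLY_RATE_USD = 250

total_hours = sum(hours for _, hours, _, _ in human_phases)            # 80
total_weeks = sum(weeks for _, _, weeks, _ in human_phases)            # 18.0
total_questions = QUESTIONS_PER_REVIEW * sum(
    rounds for _, _, _, rounds in human_phases)                        # 140
total_cost_usd = total_hours * HOURLY_RATE_USD                         # 20000

print(f"{total_hours} hours, {total_weeks} weeks, "
      f"{total_questions} questions, ${total_cost_usd:,}")
```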

Approach using an AI team to tune the responses:

Experts:

- OpenAI o1-preview & OpenAI o1

Process:

- Policy
  - Build Initial Model
  - o1 tests results: 640 questions @ 22 hours
  - Build 2nd Model
  - o1 tests results: 640 questions @ 22 hours
  - Build 3rd Model
  - o1 tests results: 6400 questions @ 22 hours
  - Total AI Agent Expert Time: 66 hours, 5760 questions answered

- Process
  - Build Initial Model
  - o1 tests results: 320 questions @ 11 hours
  - Build 2nd Model
  - o1 tests results: 320 questions @ 11 hours
  - Build 3rd Model
  - o1 tests results: 320 questions @ 11 hours
  - Total AI Agent Expert Time: 33 hours, 2880 questions answered

- Implementation
  - Build Initial Model
  - o1 tests results: 320 questions @ 11 hours
  - Build 2nd Model
  - o1 tests results: 320 questions @ 11 hours
  - Total AI Agent Expert Time: 22 hours, 640 questions answered

Summary:

- Total Expert Time Needed: 88 hours @ $50/hr = $4,400

- Time to Implement: 3 weeks

- Questions Analyzed: 6400
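To put the two summaries side by side, the sketch below derives a cost per question analyzed, the cost saving and the speed-up. It uses only the summary figures exactly as stated in this article; the derived ratios are illustrative arithmetic, not additional measurements from the engagement.

```python
# Summary figures as stated in the two "Summary" lists of this article.
human = {"cost_usd": 20_000, "weeks": 18, "questions": 140}
ai = {"cost_usd": 4_400, "weeks": 3, "questions": 6_400}


def cost_per_question(summary: dict) -> float:
    """Cost divided by the number of questions analyzed."""
    return summary["cost_usd"] / summary["questions"]


human_cpq = cost_per_question(human)
ai_cpq = cost_per_question(ai)
cost_saving = 1 - ai["cost_usd"] / human["cost_usd"]  # fraction of cost removed
speed_up = human["weeks"] / ai["weeks"]               # ratio of elapsed time

print(f"Human team: ${human_cpq:.2f} per question analyzed")
print(f"AI team:    ${ai_cpq:.4f} per question analyzed")
print(f"Cost saving: {cost_saving:.0%}, elapsed time ratio: {speed_up:.0f}x")
```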

Conclusion

Replacing human experts with AI agents for tuning cybersecurity compliance models significantly reduced both time and cost in this engagement: expert cost fell from $20,000 to $4,400, and implementation time fell from 18 weeks to 3 weeks. It also frees the human experts to carry out client engagements. The AI agents handled a substantially higher volume of questions in a shorter timeframe, which makes compliance model tuning more efficient and more thorough. As organizations continue to seek cost-effective and efficient solutions, AI agents present a promising alternative to traditional expert-driven approaches.